EGBAS

An Efficient Explorative Sampling Considering the Generative Boundaries of Deep Generative Neural Networks

Giyoung Jeon*, Haedong Jeong* and Jaesik Choi**

UNIST and KAIST

* Both authors contributed equally to this research.

** Corresponding Author

Deep generative neural networks (DGNNs) have achieved realistic and high-quality data generation. In particular, the adversarial training scheme has been applied to many DGNNs and has exhibited powerful performance. Despite recent advances in generative networks, identifying the image generation mechanism remains challenging. In this paper, we present an explorative sampling algorithm to analyze the generation mechanism of DGNNs. Our method efficiently obtains samples with attributes identical to those of a query image, from the perspective of the trained model. We define generative boundaries, which determine the activation of nodes in an internal layer, and use this information to probe inside the model. To handle a large number of boundaries, we obtain the essential set of boundaries through optimization. By gathering samples within the region enclosed by the generative boundaries, we can empirically reveal the characteristics of the internal layers of DGNNs. We also demonstrate that our algorithm finds more homogeneous, model-specific samples than variants of the \( \epsilon \)-based sampling method.

Contributions

- We propose an efficient, explorative sampling algorithm to reveal the characteristics of the internal layers of DGNNs.

- We first approximately find the set of critical boundaries of the model for a given query using a Bernoulli dropout approach.

- We efficiently obtain samples that share the same attributes as the query, from the perspective of the trained DGNN, by expanding a tree-like exploration structure.

- Our algorithm guarantees sample acceptance in high-dimensional space and designs its sampling strategy from the perspective of the model, taking complex, non-spherical generative boundaries into account.

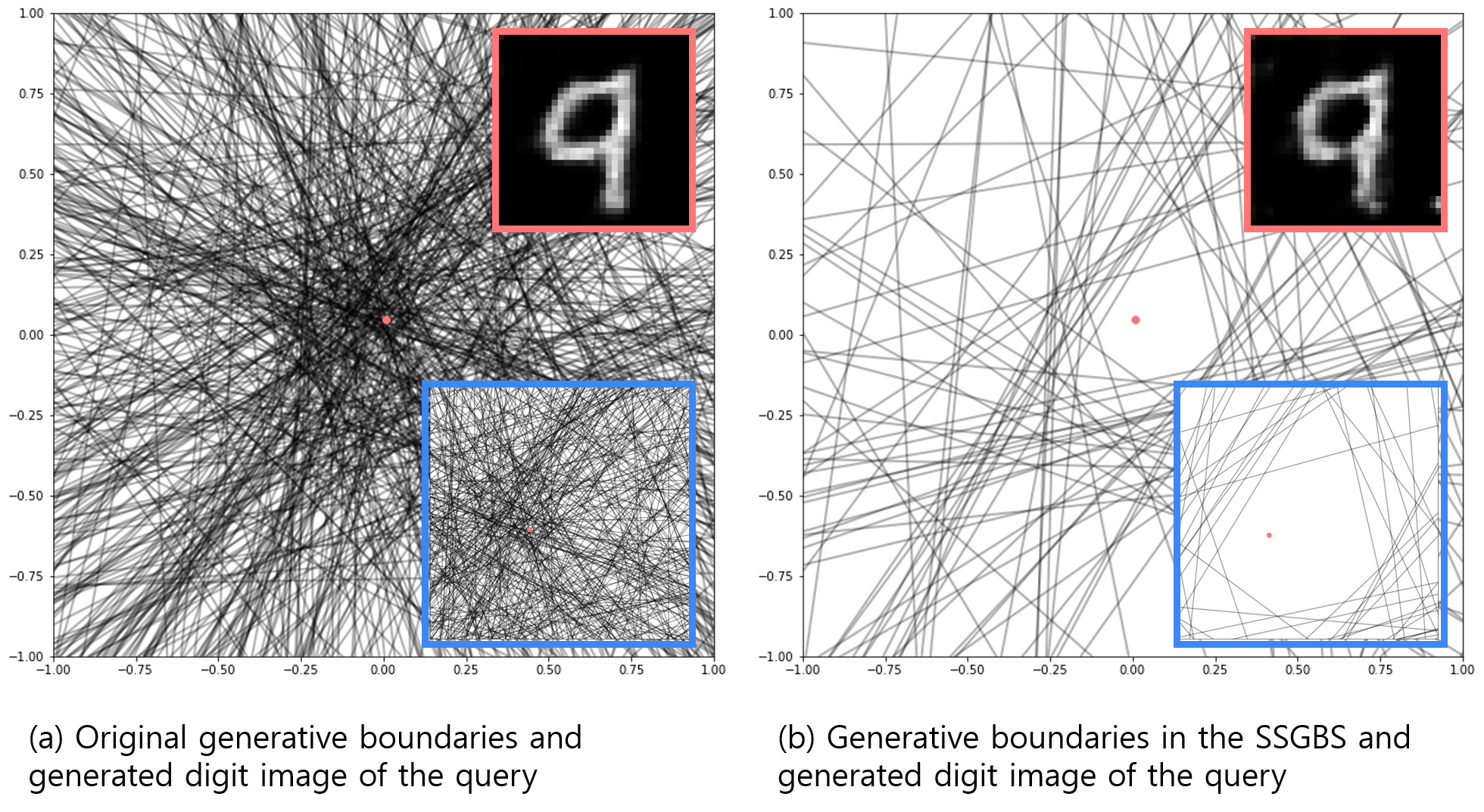

Smallest Supporting Generative Boundary Set

Decision boundaries play an important role in classification tasks, as samples in the same decision region share the same class label. In the same spirit, we manipulate the generative boundaries and regions of DGNNs. Specifically, we want to collect samples that lie in the same generative region and have identical attributes from the perspective of the DGNN. We believe that not all nodes deliver important information to the final output of the model, as some nodes may carry little relevant information. Furthermore, it has been shown that a subset of features mainly contributes to the construction of outputs in GANs.
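The notion of a generative region can be illustrated with a minimal sketch. It assumes a hypothetical one-hidden-layer ReLU network with random weights in place of a trained DGNN and treats the zero level set of each hidden unit's pre-activation as one generative boundary; in a real DGNN, boundaries of deeper layers are nonlinear in the latent space. Under these assumptions, a latent vector lies in the same generative region as the query `z0` (with respect to a boundary subset `S`) exactly when it falls on the same side of every boundary in `S`. The helper names `activation_pattern` and `in_region` are illustrative, not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
d, h = 8, 32                          # latent size and hidden width (hypothetical)
W1 = rng.standard_normal((h, d))      # toy internal layer standing in for a DGNN layer
b1 = rng.standard_normal(h)

def activation_pattern(z):
    """Side of each generative boundary: True where the unit's pre-activation is positive."""
    return W1 @ z + b1 > 0

def in_region(z, z0, S):
    """True if z lies on the same side as z0 of every boundary indexed by S."""
    S = np.asarray(S, dtype=int)
    return bool(np.all(activation_pattern(z)[S] == activation_pattern(z0)[S]))

z0 = rng.standard_normal(d)
all_boundaries = np.arange(h)
print(in_region(z0, z0, all_boundaries))   # True: a query always lies in its own region
print(in_region(-z0, z0, []))              # True: with no boundaries, the region is the whole space
```

Dropping boundaries from `S` can only enlarge the region, which is why the choice of `S` matters for which samples count as "the same" from the model's perspective.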

We define the smallest supporting generative boundary set (SSGBS), which minimizes the influences of minor (non-critical) generative boundaries.

$$SSGBS=\arg\min_{S\subseteq N} |S| + \lambda\mathbb{E}_{z\in GR(S)}\left[\max(\left\Vert G(z)-G(z_0)\right\Vert, \delta)\right]$$

where \( N \) is the set of all generative boundaries, \( GR(S) \) is the generative region enclosed by the boundaries in \( S \), \( G \) is the generator, and \( z_0 \) is the latent vector of the query. The weight \( \lambda \) controls the trade-off between the number of boundaries and how well the generated output is preserved. Formal definitions related to SSGBS are given in the paper.
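The objective above can be estimated numerically for a candidate set \( S \). The sketch below rests on assumptions not fixed by this page: a toy one-hidden-layer generator with random weights, an expectation taken over Gaussian proposals around \( z_0 \) that are kept only if they fall inside \( GR(S) \), and illustrative values for \( \lambda \) and \( \delta \). Bernoulli-sampled boundary masks appear only in a crude random search over candidate sets; this is a stand-in, not the Bernoulli dropout optimization the authors use. All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d, h, out = 8, 32, 16                                   # latent, hidden, output sizes (hypothetical)
W1, b1 = rng.standard_normal((h, d)), rng.standard_normal(h)
W2, b2 = rng.standard_normal((out, h)), rng.standard_normal(out)

def G(z):
    """Hypothetical generator: ReLU hidden layer (its units define the boundaries) + linear output."""
    return np.maximum(z @ W1.T + b1, 0.0) @ W2.T + b2

def ssgbs_objective(mask, z0, lam=1.0, delta=0.5, sigma=1.0, n=2000):
    """Monte Carlo estimate of |S| + lam * E_{z in GR(S)}[max(||G(z) - G(z0)||, delta)].

    mask: boolean array of length h; True marks a boundary kept in S.
    Assumption: z ~ N(z0, sigma^2 I), kept only if it falls inside GR(S)."""
    # Prepend z0 itself so at least one in-region sample always exists.
    zs = np.vstack([z0, z0 + sigma * rng.standard_normal((n, z0.size))])
    sides = zs @ W1.T + b1 > 0                          # side of every boundary, per sample
    inside = np.all(sides[:, mask] == sides[0, mask], axis=1)
    dists = np.maximum(np.linalg.norm(G(zs[inside]) - G(z0), axis=1), delta)
    return mask.sum() + lam * dists.mean()

def bernoulli_mask_search(z0, keep_prob=0.3, trials=50, **kw):
    """Crude random search over Bernoulli(keep_prob) masks; a stand-in, NOT the paper's optimizer."""
    best_mask, best_val = None, np.inf
    for _ in range(trials):
        mask = rng.random(h) < keep_prob
        val = ssgbs_objective(mask, z0, **kw)
        if val < best_val:
            best_mask, best_val = mask, val
    return best_mask, best_val

z0 = rng.standard_normal(d)
mask, val = bernoulli_mask_search(z0, lam=5.0)
print(f"kept {mask.sum()} of {h} boundaries, objective {val:.2f}")
```

Increasing `lam` penalizes output drift more heavily, so the search tends to keep more boundaries; decreasing it favors smaller sets \( S \).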

Explorative Generative Boundary Aware Sampling

After obtaining the smallest supporting generative boundary set and its corresponding region, we gather samples in the region and compare their generated outputs. Because such a region has a complicated shape, simple neighborhood sampling methods such as \(\epsilon\)-based sampling cannot guarantee that samples stay inside it. To guarantee exploration inside the region, we apply the Generative Boundary constrained exploration algorithm, inspired by the rapidly-exploring random tree (RRT) algorithm, which was originally developed for robot path planning in complex configuration spaces.
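The exploration step can be sketched as a plain RRT loop with a region check. This is a simplified stand-in under stated assumptions, not the authors' Generative Boundary constrained exploration algorithm: random targets are drawn uniformly from a box around `z0`, the nearest tree node takes a fixed-size step toward each target, and the new node is kept only if a caller-supplied `in_region` test accepts it. Because every stored node passes that test, all returned samples lie inside the region, while the tree can still grow into non-spherical parts of it. The function name and its parameters (`step`, `box`, `max_iters`) are hypothetical.

```python
import numpy as np

def explore_region(z0, in_region, n_samples=100, step=0.1, box=2.0, max_iters=10_000, seed=0):
    """RRT-style exploration (hypothetical sketch): grow a tree from z0, keeping only in-region nodes."""
    rng = np.random.default_rng(seed)
    nodes = [np.asarray(z0, dtype=float)]
    for _ in range(max_iters):
        if len(nodes) >= n_samples:
            break
        # Random target in a box around the query (assumed sampling domain).
        target = nodes[0] + rng.uniform(-box, box, size=nodes[0].shape)
        tree = np.stack(nodes)
        nearest = tree[np.argmin(np.linalg.norm(tree - target, axis=1))]
        direction = target - nearest
        dist = np.linalg.norm(direction)
        if dist == 0:
            continue
        new = nearest + min(step, dist) * direction / dist
        if in_region(new):            # reject any node that crosses a boundary
            nodes.append(new)
    return np.stack(nodes)

# Usage with a hypothetical toy region: same side as z0 of 6 random hyperplanes.
rng = np.random.default_rng(1)
W, b = rng.standard_normal((6, 8)), rng.standard_normal(6)
z0 = rng.standard_normal(8)
side0 = W @ z0 + b > 0

def inside(z):
    return bool(np.all((W @ z + b > 0) == side0))

samples = explore_region(z0, inside, n_samples=200)
print(samples.shape)
```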

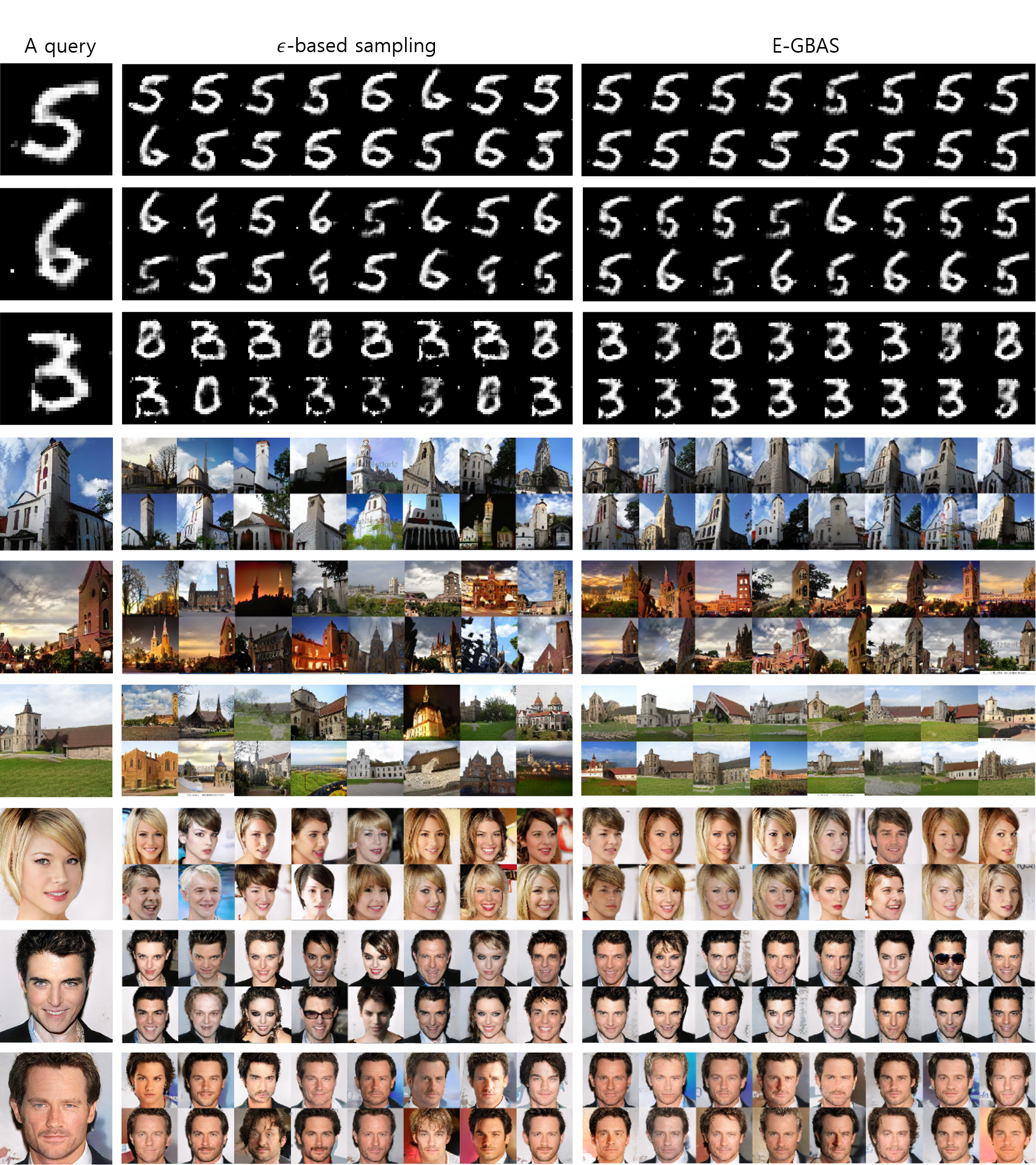

Our algorithm guarantees that accepted samples lie inside the target generative region, whereas distance-based methods can return out-of-region samples.
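For contrast, the following sketch measures how often \(\epsilon\)-based sampling leaves the target region. It assumes a toy region defined by the sign pattern of a random linear layer (all boundaries kept) and draws samples uniformly from an L2 ball of radius \(\epsilon\) around `z0` without any region check. The fraction of in-region samples typically falls as \(\epsilon\) grows, since larger balls cross more boundaries; the exact rates depend on the random weights. The helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
d, h = 8, 32                                       # toy sizes (hypothetical)
W1, b1 = rng.standard_normal((h, d)), rng.standard_normal(h)
z0 = rng.standard_normal(d)
p0 = W1 @ z0 + b1 > 0                              # query's side of every boundary

def in_region(z):
    """Same side as z0 of all h boundaries (S = N, for illustration)."""
    return bool(np.all((W1 @ z + b1 > 0) == p0))

def epsilon_ball_samples(z0, eps, n):
    """Uniform samples from the L2 ball of radius eps around z0."""
    dirs = rng.standard_normal((n, z0.size))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
    radii = eps * rng.random(n) ** (1.0 / z0.size)
    return z0 + dirs * radii[:, None]

for eps in (0.1, 0.5, 1.0, 2.0):
    zs = epsilon_ball_samples(z0, eps, 1000)
    rate = np.mean([in_region(z) for z in zs])
    print(f"eps={eps}: {rate:.1%} of samples inside the region")
```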

Experiments

We evaluate our algorithm on three models: DCGAN trained on MNIST, and PGGAN trained on Celeb-A and on LSUN.

Citation

Giyoung Jeon, Haedong Jeong, and Jaesik Choi. “An efficient explorative sampling considering the generative boundaries of deep generative neural networks.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 34. No. 04. 2020.

Bibtex

@inproceedings{jeon2020efficient,
  title={An efficient explorative sampling considering the generative boundaries of deep generative neural networks},
  author={Jeon, Giyoung and Jeong, Haedong and Choi, Jaesik},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  volume={34},
  number={04},
  pages={4288--4295},
  year={2020}
}

Acknowledgement

This work was supported by the Institute for Information & communications Technology Planning & Evaluation (IITP) grant funded by the Ministry of Science and ICT (MSIT), Korea (No. 2017-0-01779, XAI) and the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT), Korea (NRF-2017R1A1A1A05001456).