Author: pail

Jiyeon Han (PhD student) is invited to present at the AI Research Summit of Ai4 Summit 2024: https://app.swapcard.com/widget/event/ai4-2024/person/RXZlbnRQZW9wbGVfMzE1NzkwMjg=

Kyowoon Lee successfully defended his PhD thesis, Learning to Achieve Goals via Curriculum and Hierarchical Reinforcement Learning. Congratulations Dr. Lee!

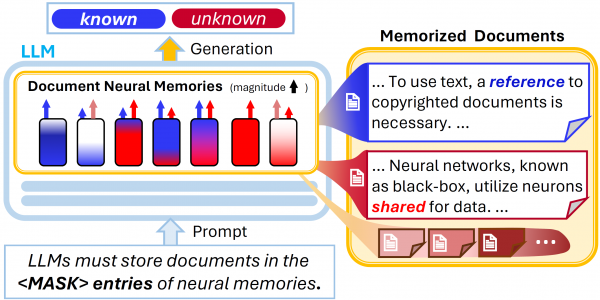

A paper, Memorizing Documents with Guidance in Large Language Models, written by Bumjin Park and Jaesik Choi, is accepted at IJCAI-2024.

1. Problem: This work tackles the problem of storing document-wise memories in LLMs.
2. Proposed Method: This work…

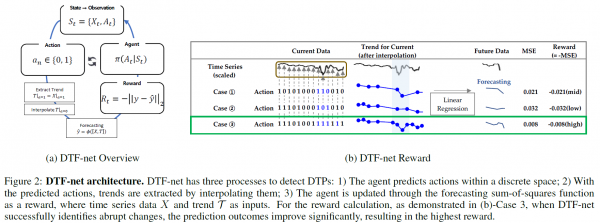

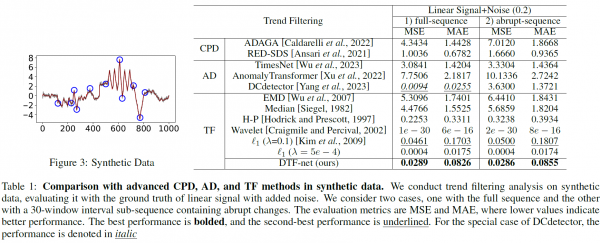

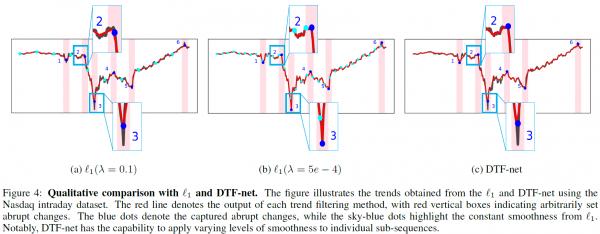

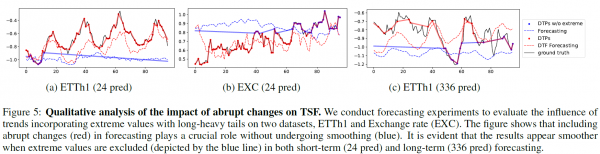

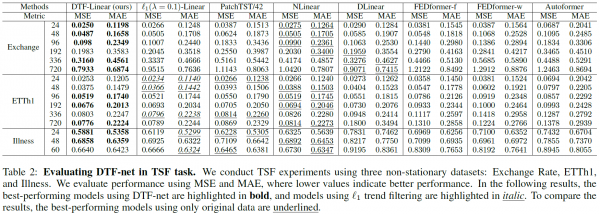

A paper, Towards Dynamic Trend Filtering through Trend Points Detection with Reinforcement Learning, written by Jihyeon Seong, Sekwang Oh, and Jaesik Choi, is accepted at IJCAI-2024.

Dr. Anh Tong (the first PhD graduate of SAIL) joins the Graduate School of AI at Korea University as an assistant professor. Congratulations, Prof. Tong! http://xai.korea.ac.kr/teacher/teacher

A paper, Explainable Artificial Intelligence (XAI) 2.0: A manifesto of open challenges and interdisciplinary research directions, is accepted for publication in Information Fusion.

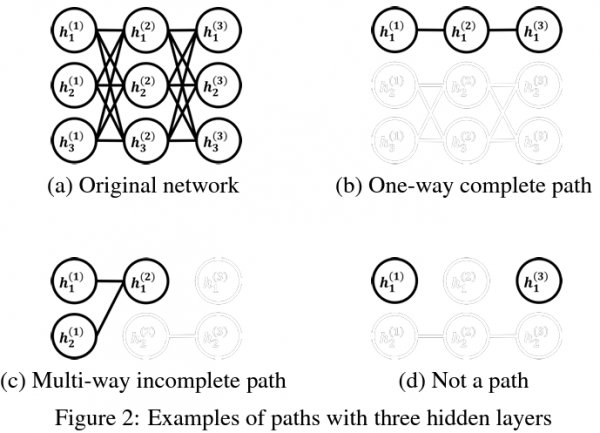

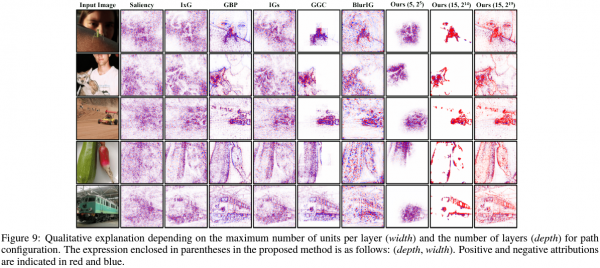

A paper, Pathwise Explanation of ReLU Neural Networks, written by Seongwoo Lim, Won Jo, Joohyung Lee and Jaesik Choi, is accepted at AISTATS-24.

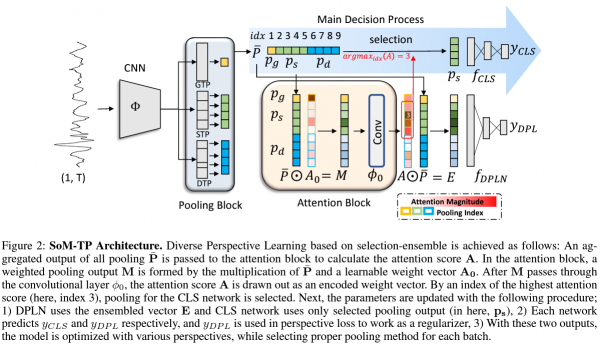

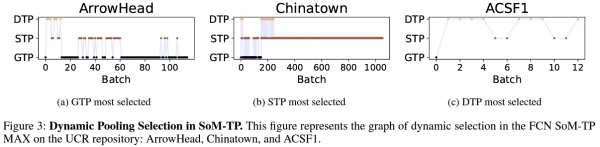

A paper, Towards Diverse Perspective Learning with Select over Multiple Temporal Poolings, written by Jihyeon Seong, Jungmin Kim, and Jaesik Choi, is accepted at AAAI-24.

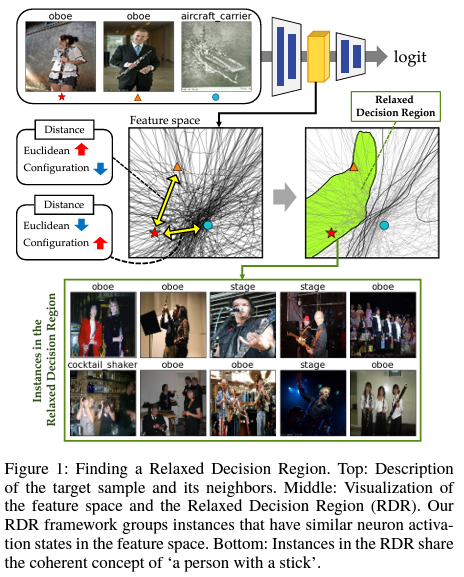

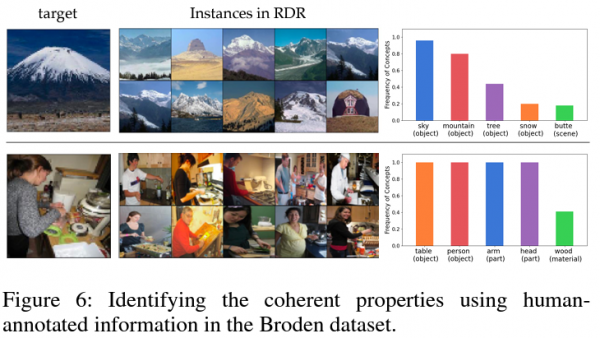

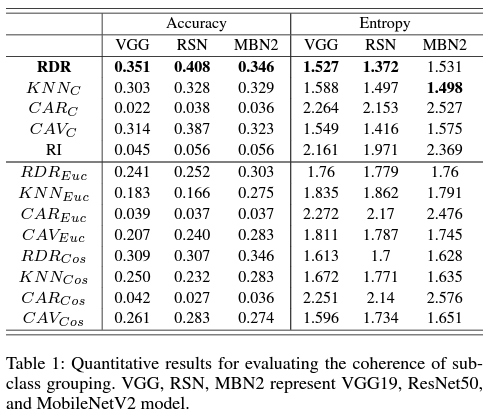

A paper, Understanding Distributed Representations of Concepts in Deep Neural Networks without Supervision, written by Wonjoon Chang, Dahee Kwon, and Jaesik Choi, is accepted at AAAI-2024.

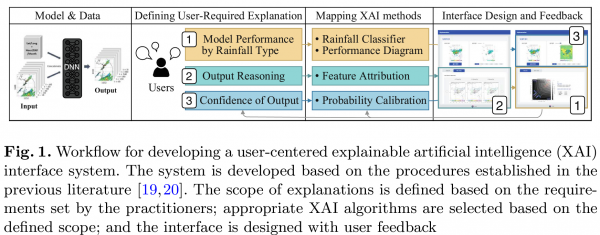

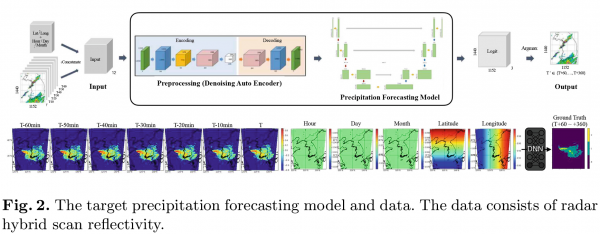

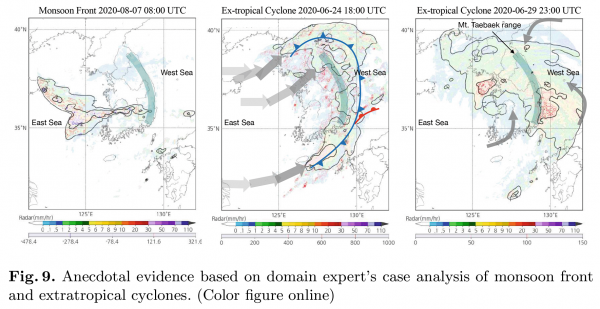

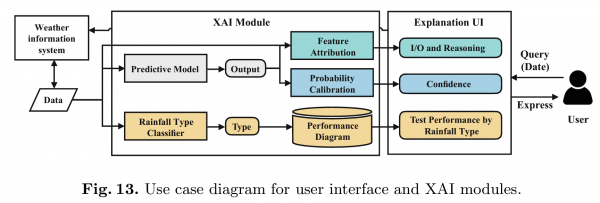

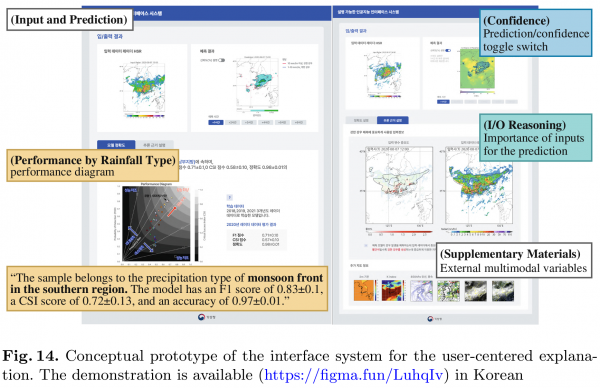

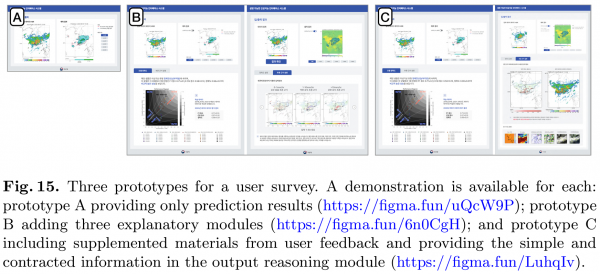

A paper, Explainable AI-Based Interface System for Weather Forecasting Model, written by Soyeon Kim, Junho Choi, Yeji Choi, Subeen Lee, Artyom Stitsyuk, Minkyoung Park, Seongyeop Jeong, You-Hyun Baek, and Jaesik Choi, is presented at the International Conference on Human-Computer Interaction (HCII 2023)…