Author: pail

– Ye Eun Chun, Sunjae Kwon, Kyunghwan Sohn, Nakwon Sung, Junyoup Lee, Byoung Ki Seo, Kevin Compher, Seung-won Hwang and Jaesik Choi, CR-COPEC: Causal Rationale of Corporate Performance Changes to learn from Financial Reports, Findings of EMNLP, 2023.
– Cheongwoong…

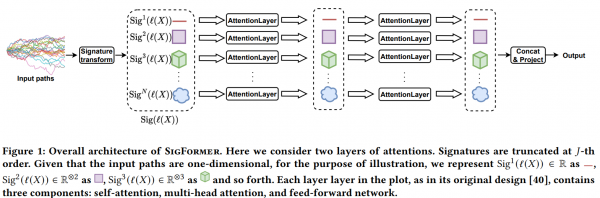

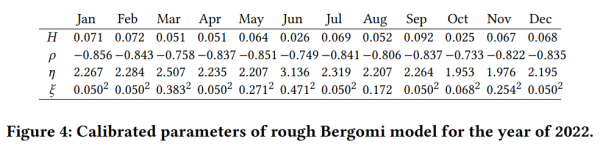

A paper, SigFormer: Signature Transformers for Deep Hedging, written by Anh Tong, Thanh Nguyen-Tang, Dongeun Lee, Toan Tran and Jaesik Choi, has been accepted at ACM ICAIF 2023.

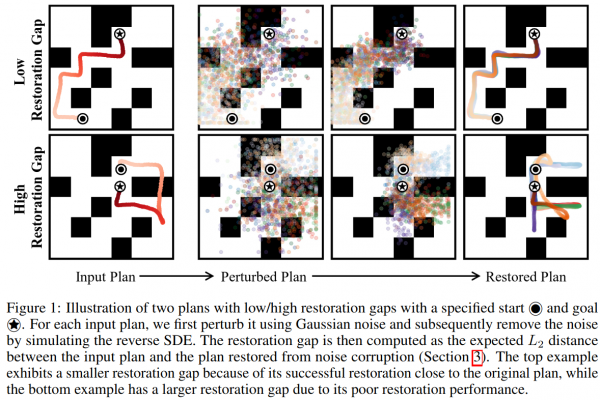

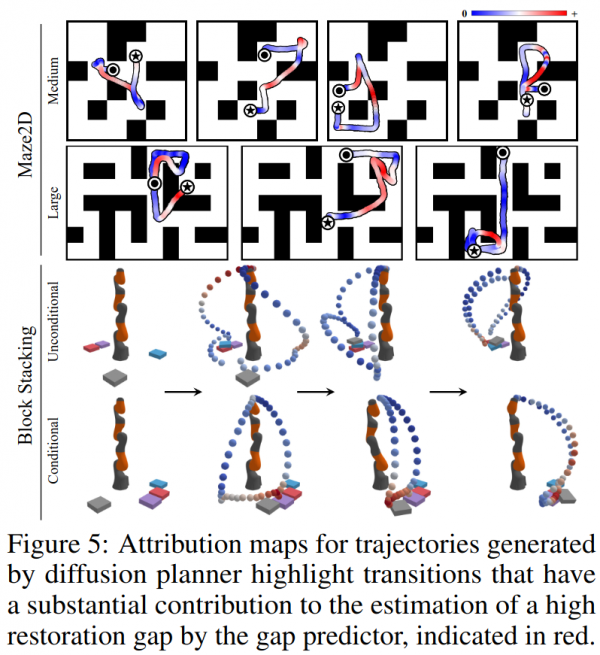

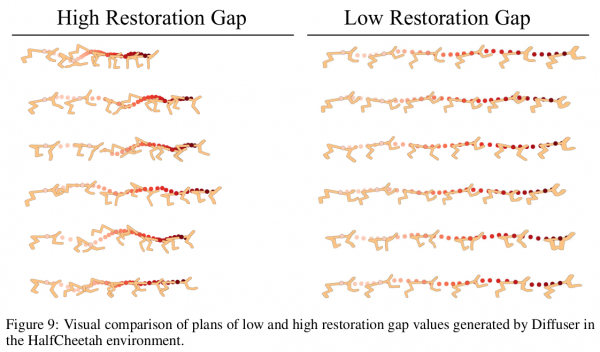

A paper, Refining Diffusion Planner for Reliable Behavior Synthesis by Automatic Detection of Infeasible Plans, written by Kyowoon Lee, Seongun Kim and Jaesik Choi, has been accepted at NeurIPS 2023.

Dear prospective candidates for the lab (SAIL@KAIST) and the center (XAI@KAIST): we have recently received many applications for graduate studentship positions at the KAIST Kim Jaechul Graduate School of AI and for researcher positions at the Explainable Artificial Intelligence Center at…

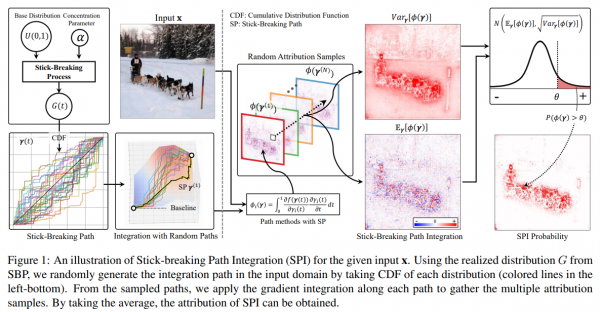

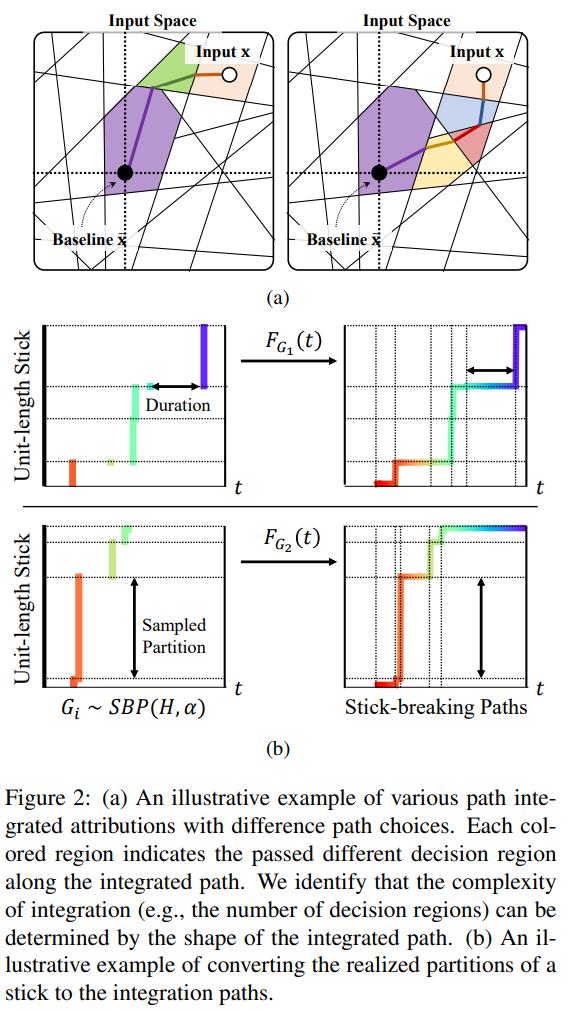

A paper, Beyond Single Path Integrated Gradients for Reliable Input Attribution via Randomized Path Sampling, written by Giyoung Jeon, Haedong Jeong and Jaesik Choi, has been accepted at ICCV 2023.

Seongun Kim, a PhD student in our lab, gave an invited talk at the Explainable Robotics Workshop at ICRA 2023.

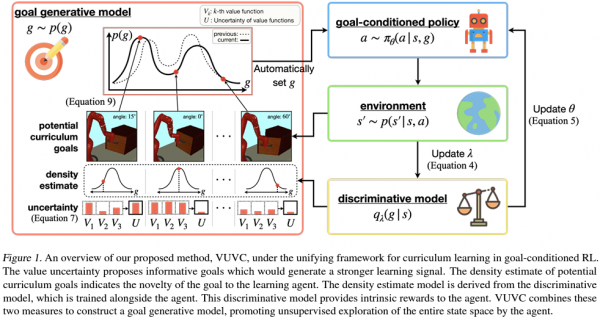

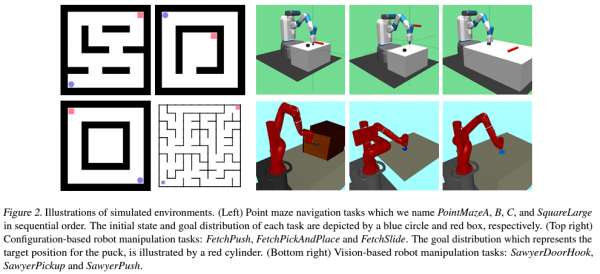

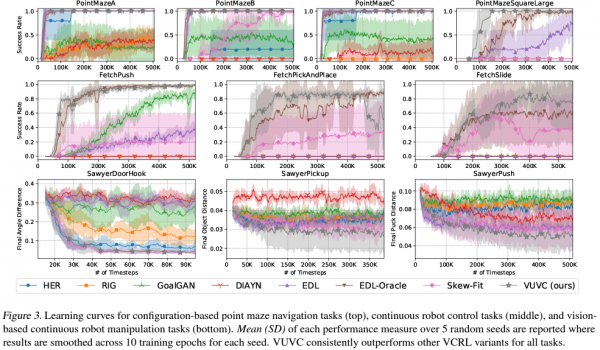

Our paper, Variational Curriculum Reinforcement Learning for Unsupervised Discovery of Skills, written by Seongun Kim, Kyowoon Lee and Jaesik Choi, has been accepted at ICML 2023.

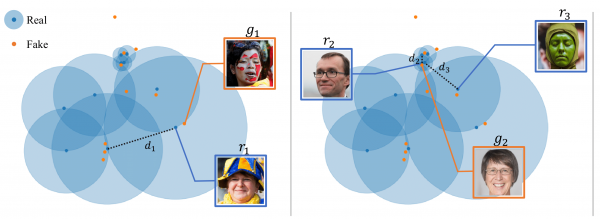

Our paper, Rarity Score: A New Metric to Evaluate the Uncommonness of Synthesized Images, written by Jiyeon Han, Hwanil Choi, Yunjey Choi, Junho Kim, Jung-Woo Ha and Jaesik Choi, has been accepted at ICLR 2023.

A paper, Adaptive and Explainable Deployment of Navigation Skills via Hierarchical Deep Reinforcement Learning, written by Kyowoon Lee, Seongun Kim and Jaesik Choi, has been accepted at ICRA 2023.

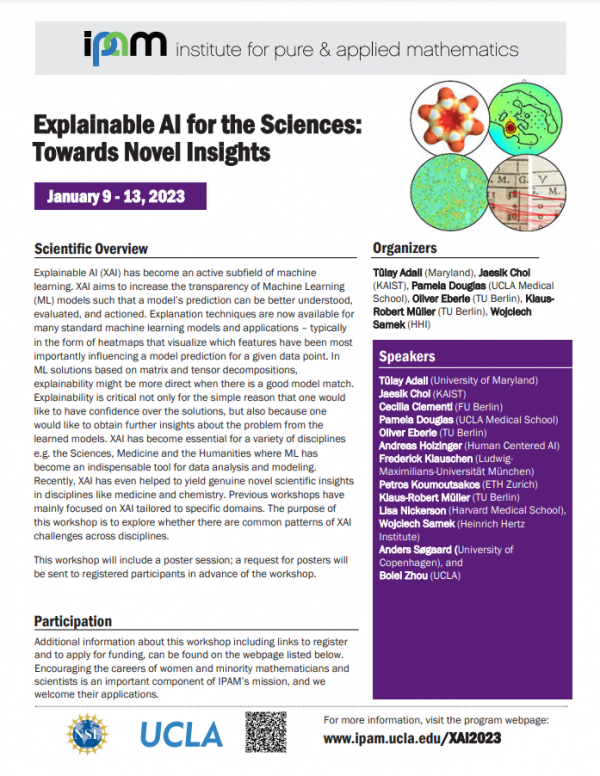

Prof. Choi presented his work at the IPAM XAI workshop "Explainable AI for the Sciences: Towards Novel Insights", held at UCLA.