XAI Center

XAI Center Homepage

The XAI Center develops new and modified machine learning techniques that produce explainable AI models. These models give effective explanations that help humans understand the reasons behind decisions made through machine learning and statistical inference on real-world data. The center contributes especially to the medical and financial industries, which gain significant advantages from adopting AI but face high risks when their models lack explainability.

Explainable AI for Weather Forecast AI Models

In this project, we focus on designing an explainable AI algorithm, grounded in user experiments, together with an intuitive human-computer interaction (HCI) interface tailored to weather forecasters. We aim to demystify AI decision-making in weather prediction, enhancing trust and usability for professionals in the field.

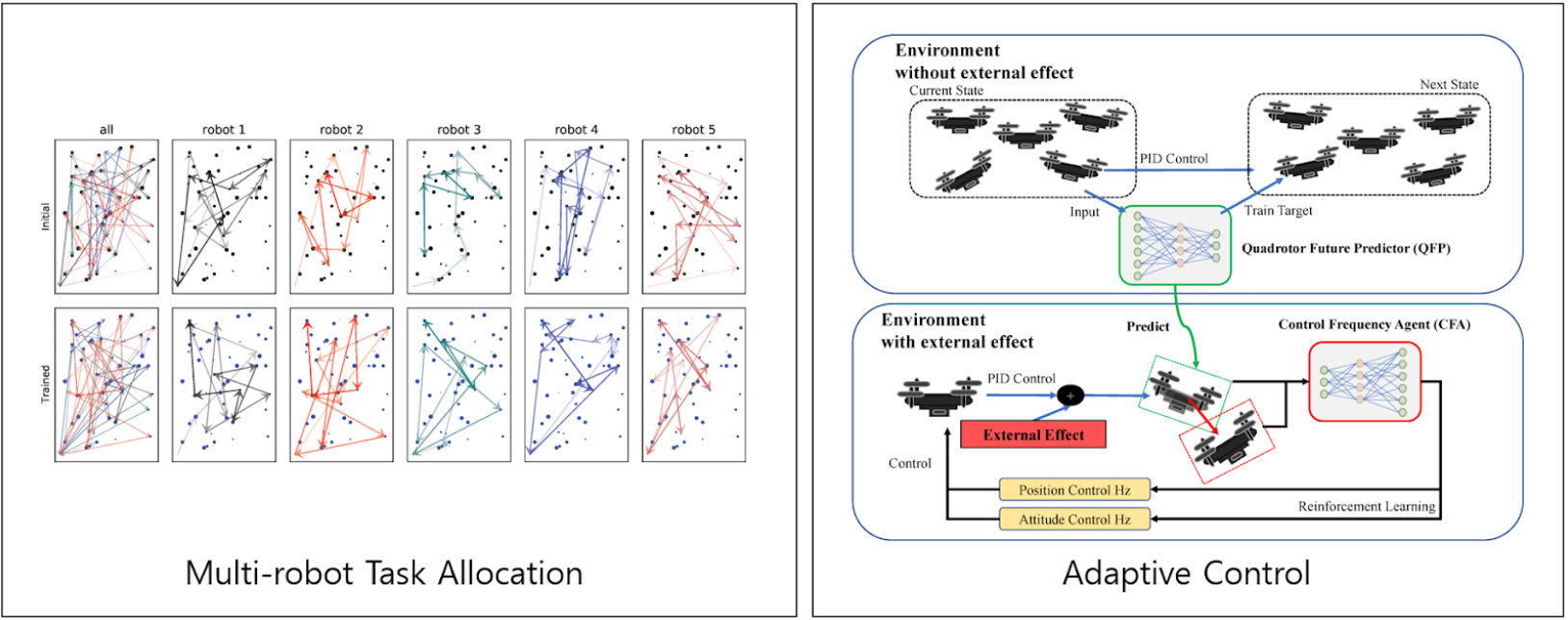

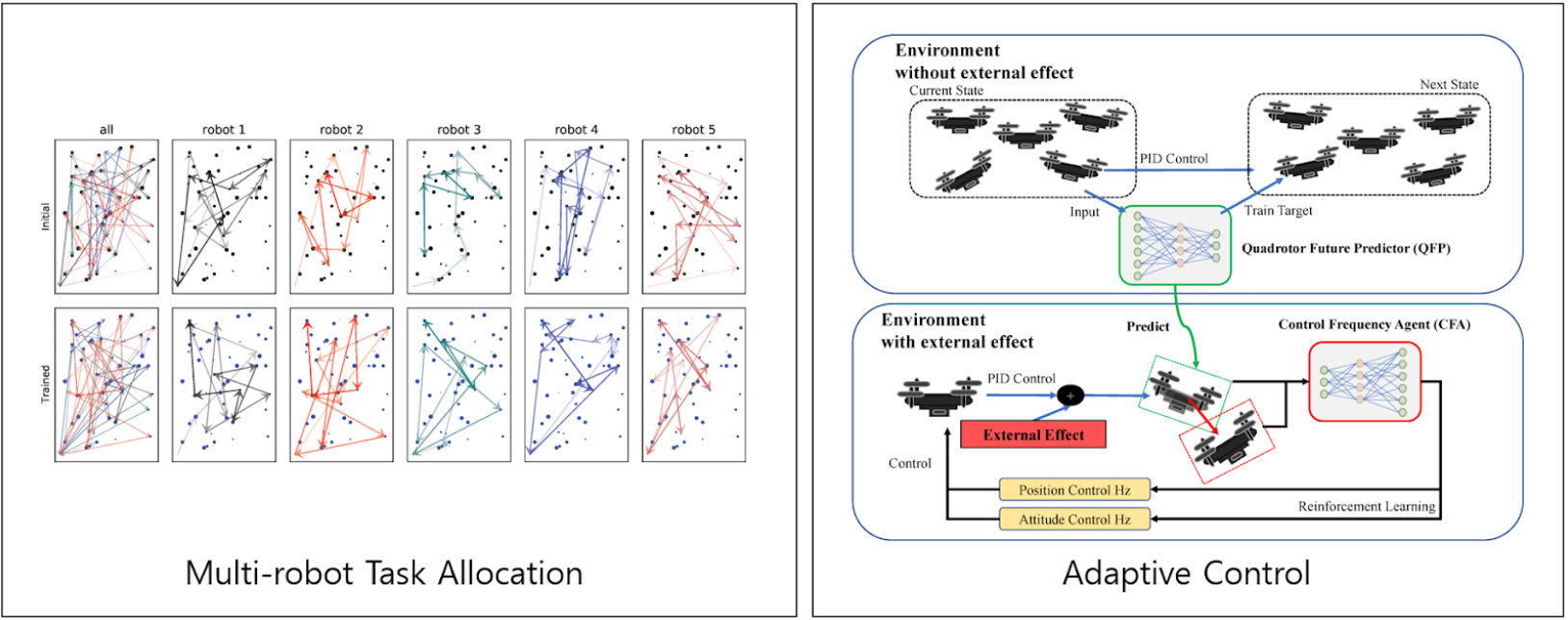

Unmanned Swarm Cyber Physical System

In this project, we develop reinforcement learning algorithms for the navigation and operation of drones and mobile robots. The main goals include designing adaptive control strategies that remain robust to unpredictable changes in dynamics, such as variations in payload and shifting wind conditions. A major pillar of the project is the development of advanced multi-agent systems that enable robots to cooperate seamlessly and tackle complex tasks together. Through this project, we aim to push the boundaries of robotic capabilities, enhancing the autonomy and efficiency of robots in dynamic environments.

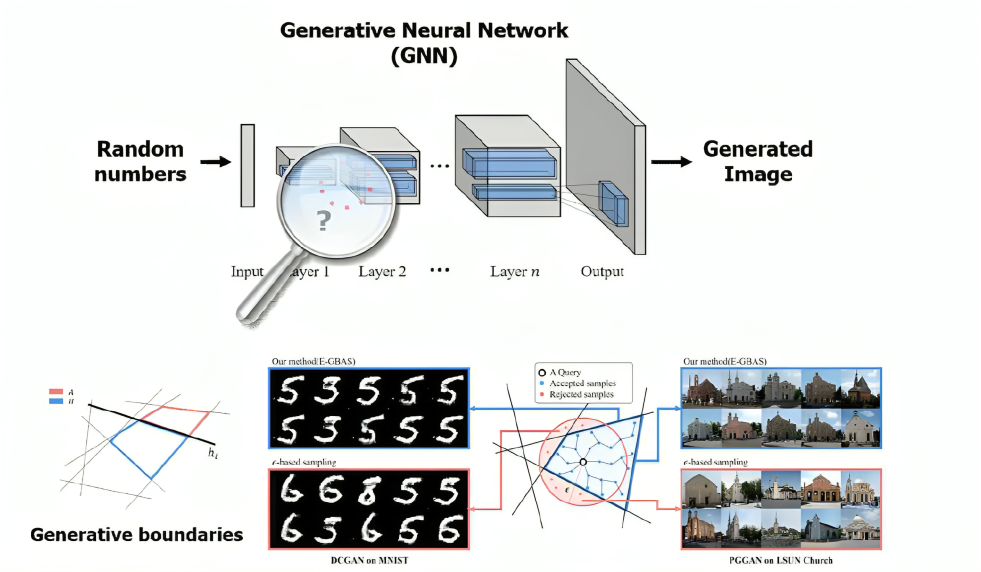

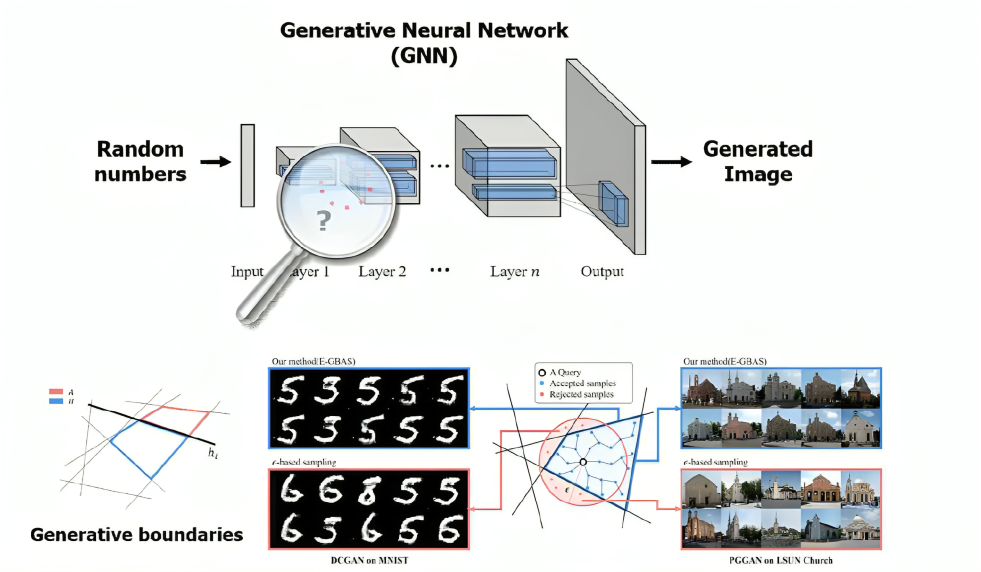

ADD-XAI

This project aims to automatically detect bugs in deep learning models in order to enhance their robustness and reliability. In the initial phase, we focused on debugging the internals of generative adversarial networks (GANs). We did this by extracting samples with similar characteristics and then detecting and repairing mis-trained nodes in an unsupervised manner. The scope of this research has since expanded to general deep neural networks.

Explainable AKI Prediction and Prevention System

In this project, we develop an AI-driven system aimed at forecasting Acute Kidney Injury (AKI) in hospitalized patients. This system not only predicts potential AKI incidents but also employs eXplainable AI (XAI) techniques to illuminate modifiable risk factors for attending physicians. By providing actionable insights, the system empowers healthcare professionals to implement preventative measures, thereby aiming to lower the incidence rate of AKI through timely intervention.

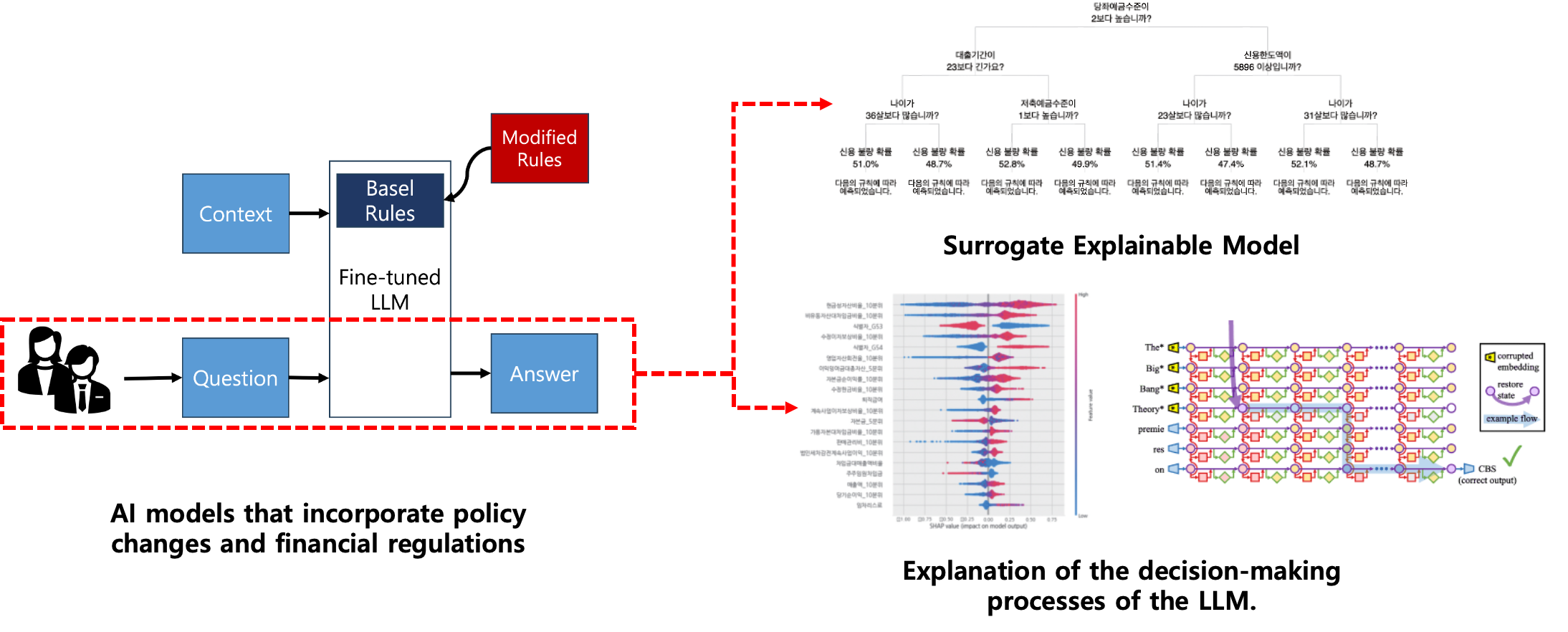

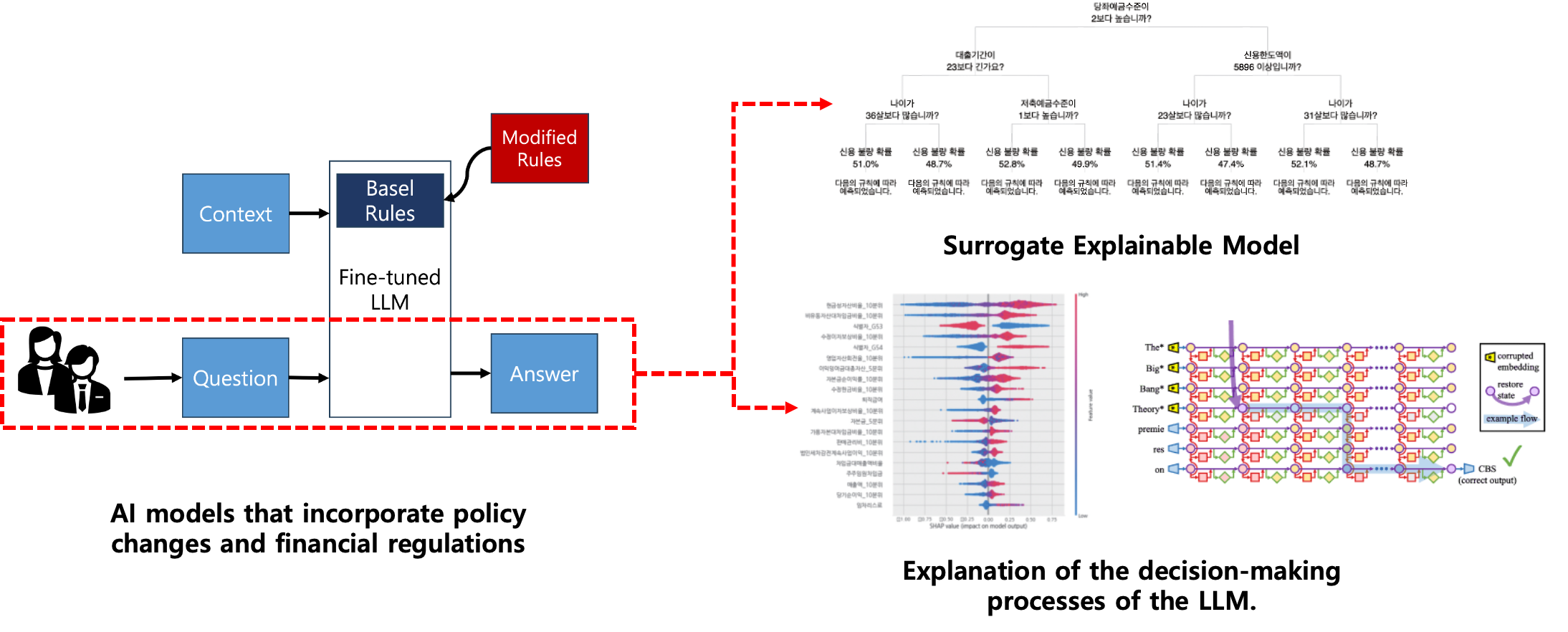

AI-DEP: Explainable AI Models Incorporating Policy Changes and Financial Regulations

Financial policies and regulations are established and amended as times and economic conditions change. In this project, we develop explainable AI models whose decisions reflect these evolving policies and financial regulations (e.g., the Basel Accords).

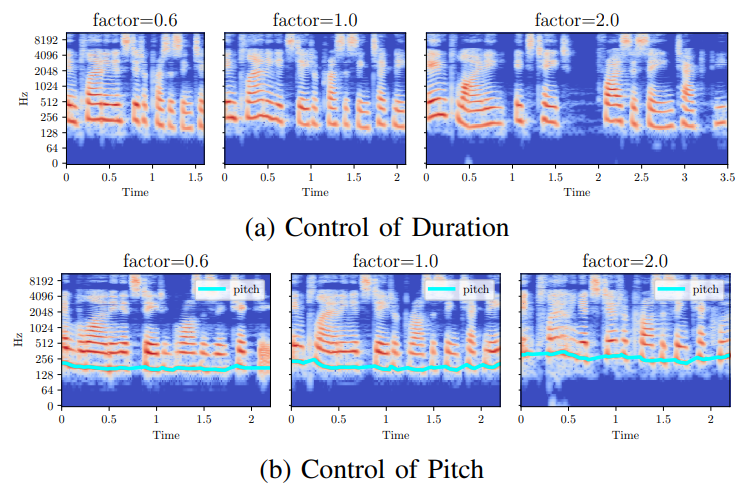

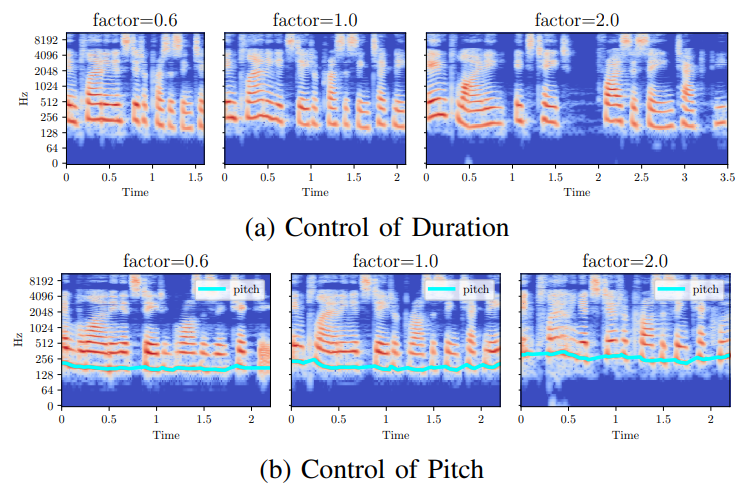

Samsung: Correction of Prosody and Mispronunciations in TTS Models via Counterfactual Neural Activations

The goal of this project is to develop a model-agnostic method that uses counterfactual neural activations to control prosody and pronunciation in text-to-speech (TTS) models. The method adjusts prosodic features while maintaining high speech intelligibility, and it corrects mispronunciations without compromising synthesis quality. It also enables post-hoc adjustments to speech output, so TTS models can be adapted to new requirements without retraining.

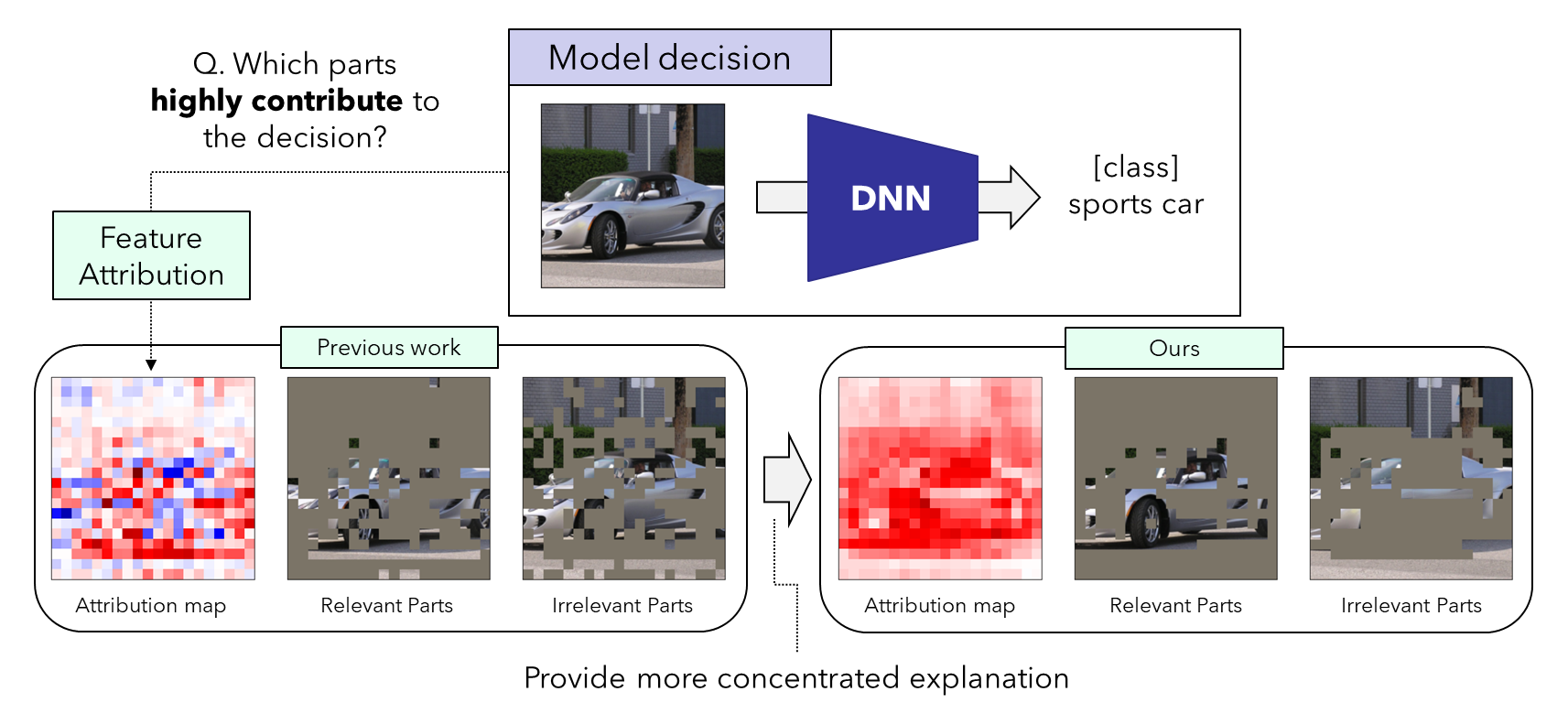

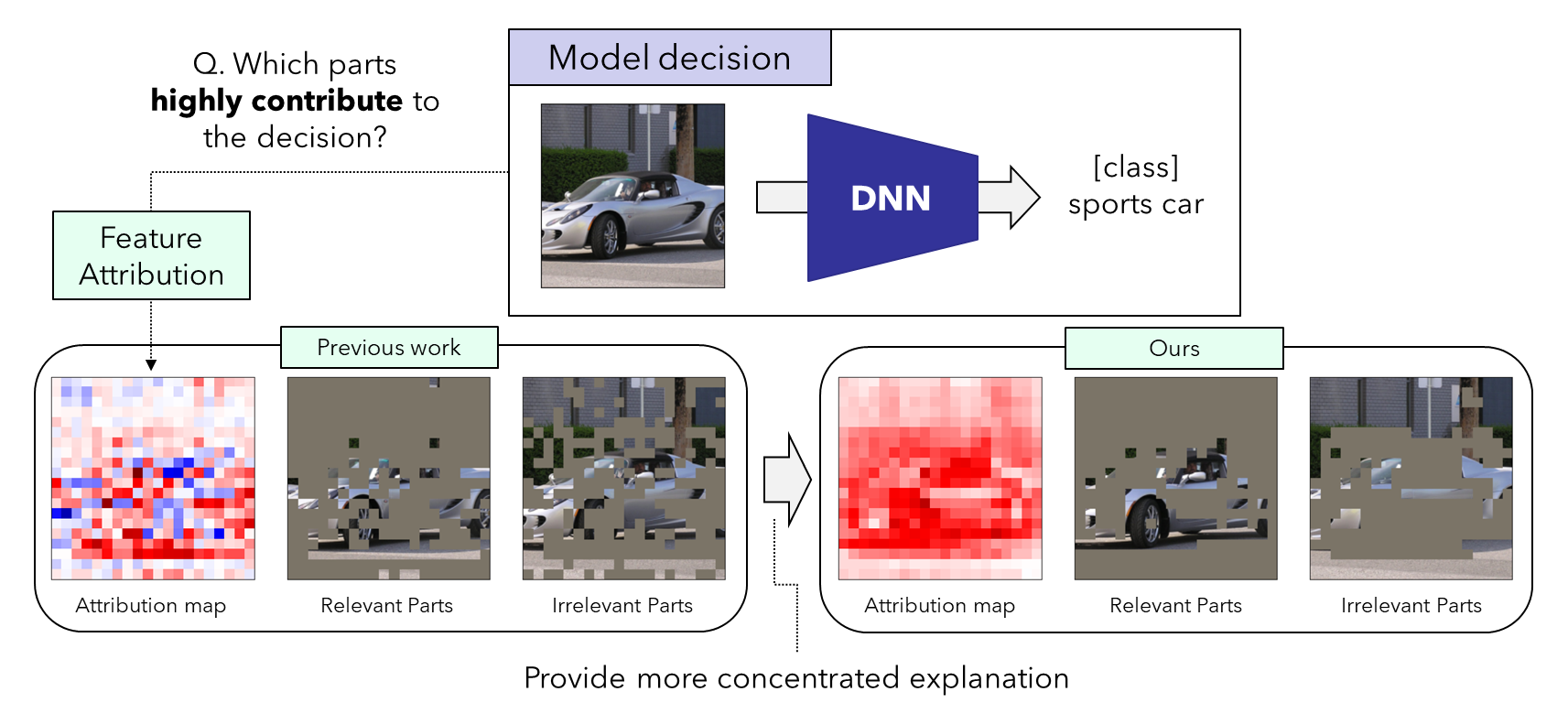

HYUNDAI: Accurate Feature Attribution for Model Evaluation

Understanding which features significantly influence a model's decisions is critical for evaluating complex black-box models. This project aims to provide more accurate feature attributions for explaining deep learning models. We identify limitations of traditional methods, such as those based on Shapley values, and propose a new attribution rule grounded in game-theoretic principles. By accounting for feature interactions, our approach yields more concentrated explanations.
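To make the "traditional" Shapley-value attribution concrete (this is the baseline the project improves on, not the project's new attribution rule), here is a minimal sketch that computes exact Shapley values by enumerating every coalition. It assumes a hypothetical toy model f = 2·x1 + 3·x2·x3 evaluated at x = (1, 1, 1), with absent features set to a baseline of 0; the names `shapley_values` and `toy_model_value` are illustrative only.

```python
from fractions import Fraction
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values by enumerating all coalitions (O(2^n); toy sizes only)."""
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = Fraction(0)
        for k in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of size k
            weight = Fraction(factorial(k) * factorial(n - k - 1), factorial(n))
            for coalition in combinations(others, k):
                s = frozenset(coalition)
                # marginal contribution of feature i when added to coalition S
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


def toy_model_value(coalition):
    """Hypothetical model f = 2*x1 + 3*x2*x3; present features = 1, absent = 0 (assumed baseline)."""
    x = {f: (1 if f in coalition else 0) for f in ("x1", "x2", "x3")}
    return 2 * x["x1"] + 3 * x["x2"] * x["x3"]


phi = shapley_values(["x1", "x2", "x3"], toy_model_value)
print({f: float(v) for f, v in phi.items()})  # {'x1': 2.0, 'x2': 1.5, 'x3': 1.5}
```

The interaction term 3·x2·x3 is split evenly between x2 and x3 (1.5 each), even though neither feature contributes anything on its own. This even split of interaction credit is the kind of behavior the paragraph has in mind when it points to feature interactions as a limitation of Shapley-based attribution. Exact enumeration is exponential in the number of features, so practical tools rely on sampling or model-specific approximations.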

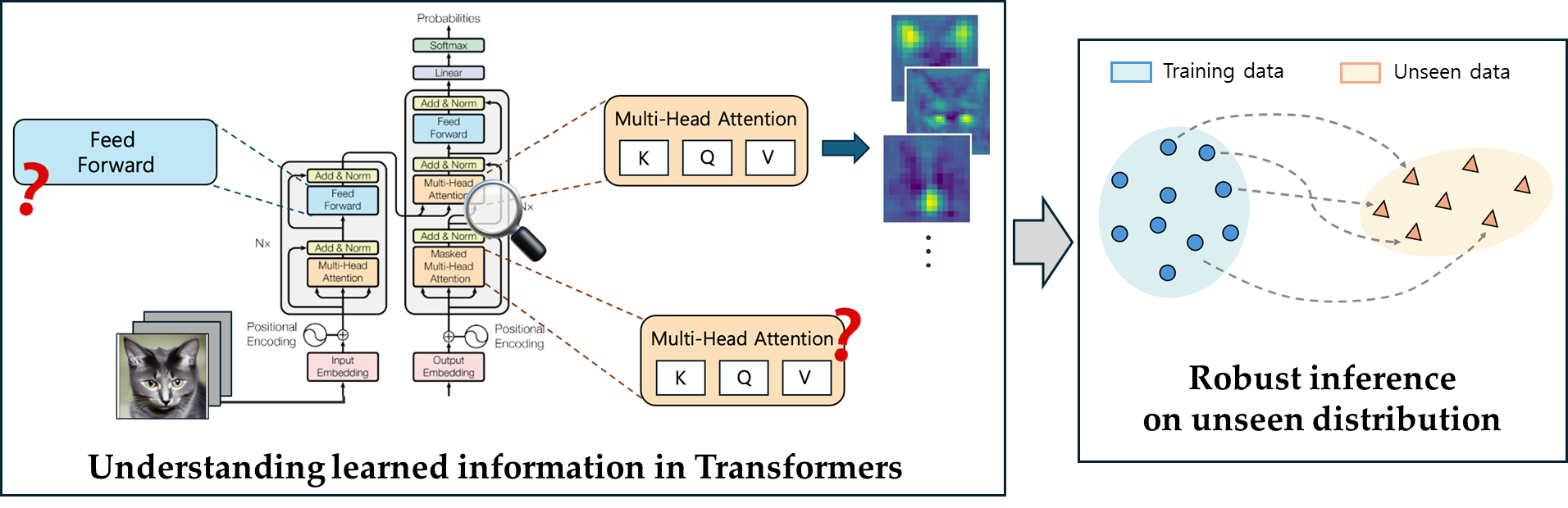

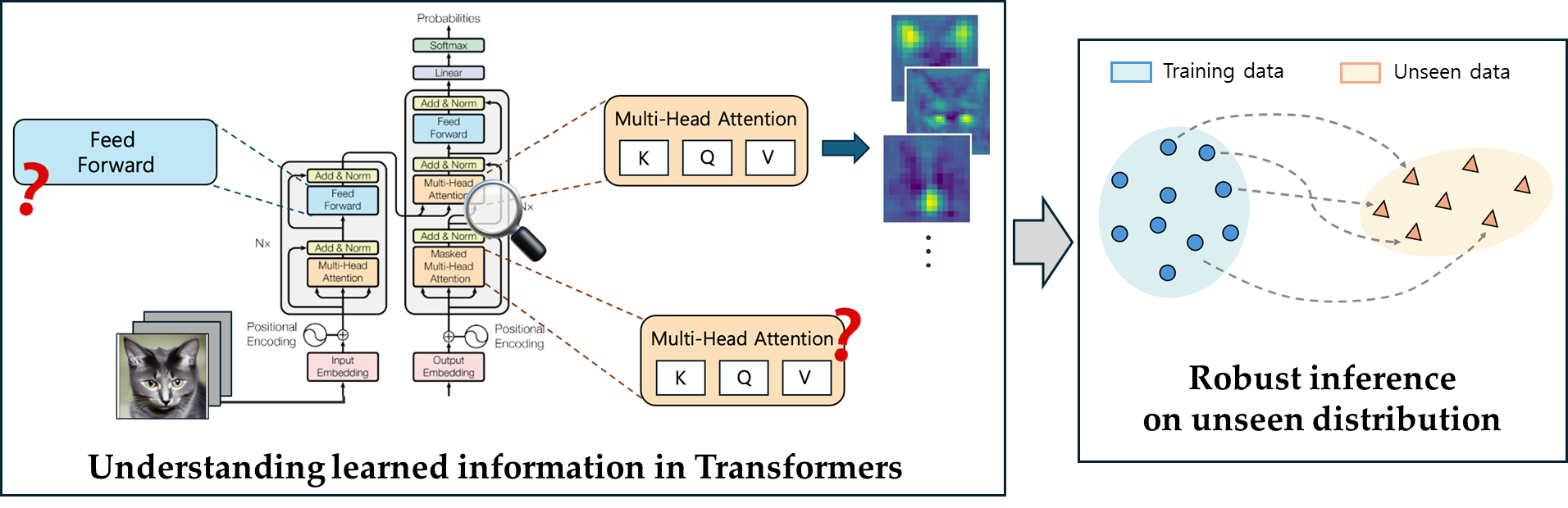

Breakthrough in Neural Scaling Law

This project aims to address the limitations of neural scaling laws by developing methods for robust inference that use the knowledge captured by Transformer models. The first phase focuses on understanding and explaining the relationships among the information learned within Transformer modules. In the second phase, the goal is to create algorithms that enable accurate inference on unseen data without further training by effectively integrating existing knowledge with new information.

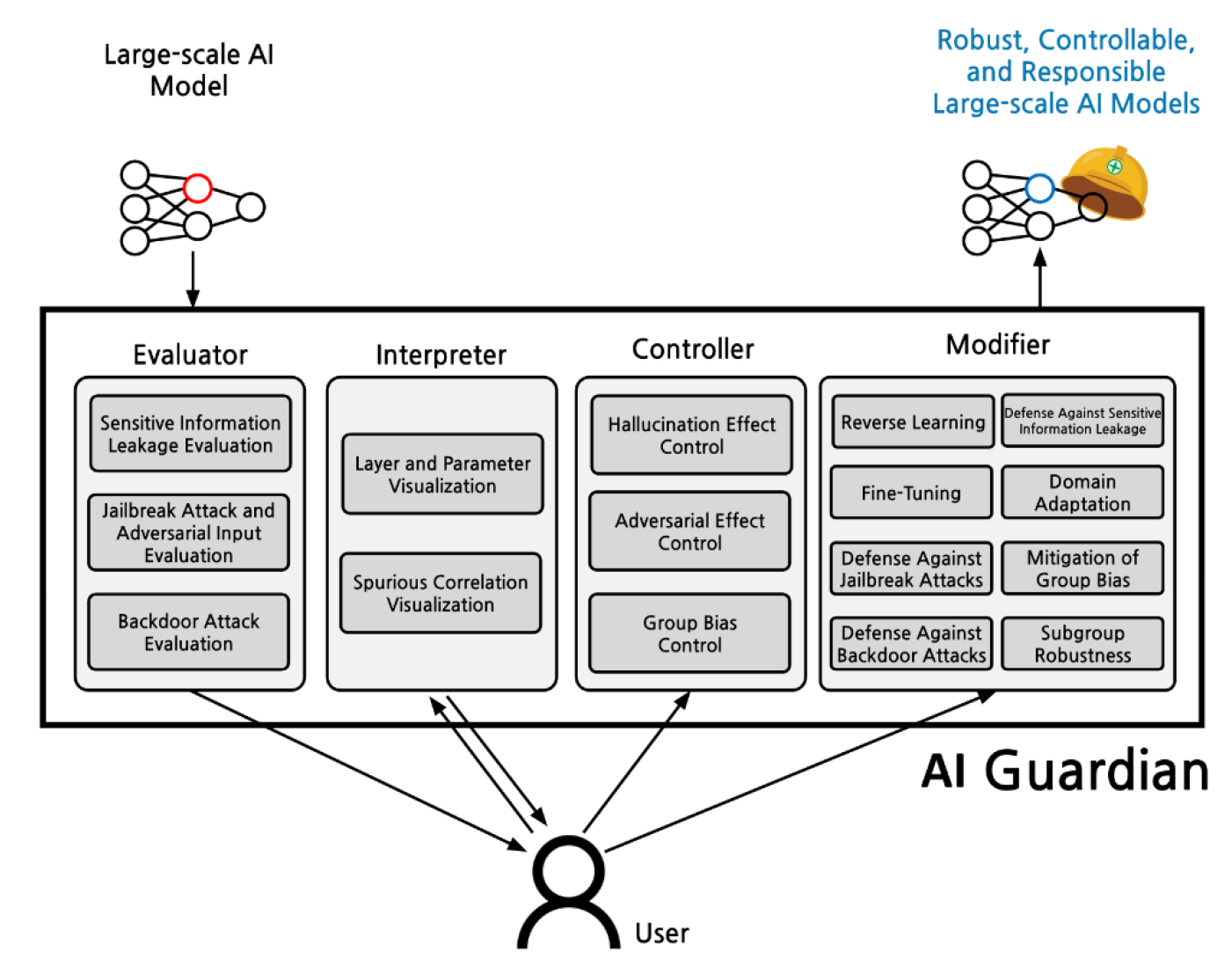

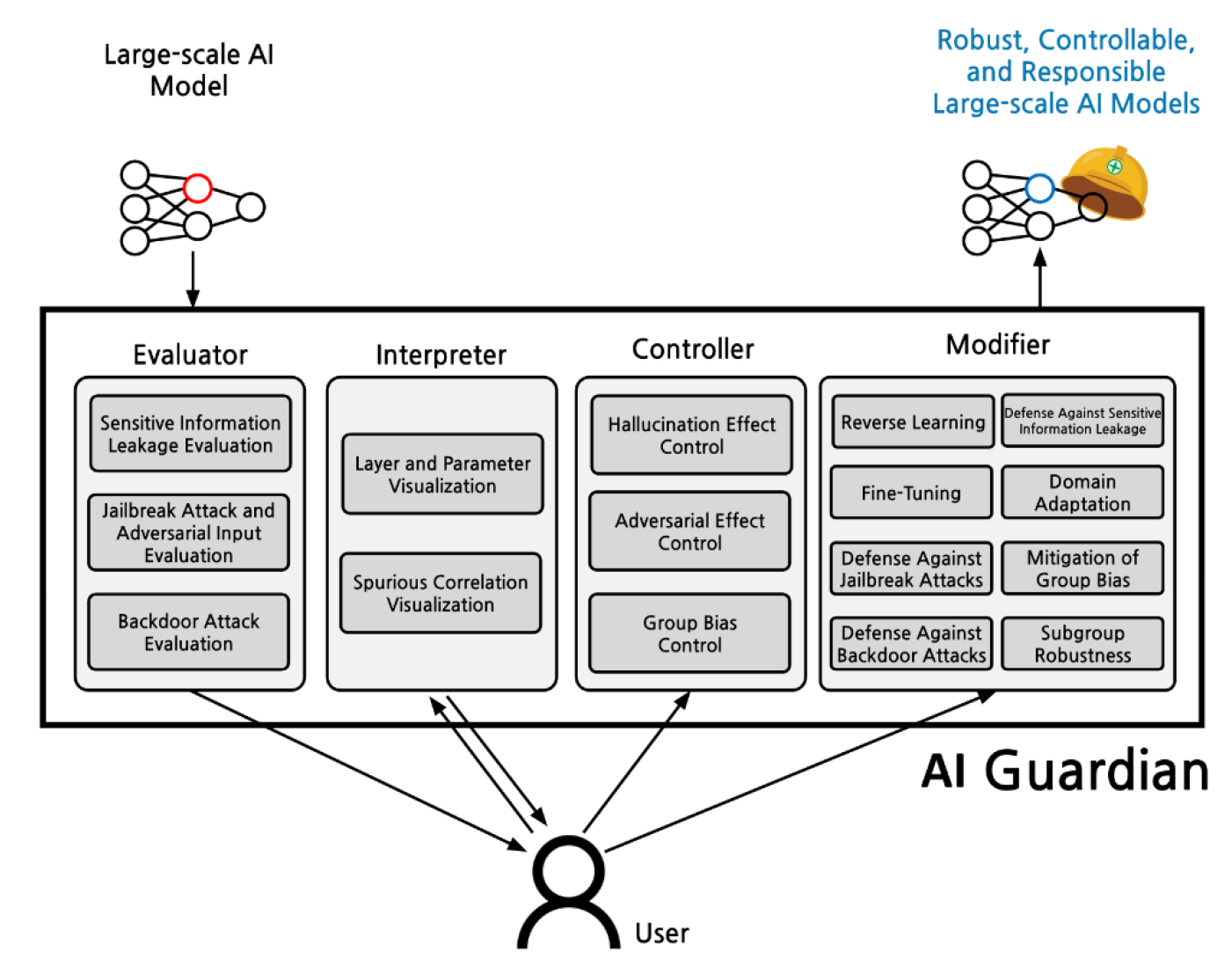

AI Guardians: Development of Robust, Controllable, and Unbiased Trustworthy AI Technology

The goal of this project is to develop robust, controllable, and unbiased trustworthy AI technology. To achieve this, we pursue three main research directions: first, building predictable and stable systems by developing robust and controllable trustworthy AI; second, minimizing AI bias to improve the fairness of trustworthy AI technology; and third, ensuring consistent AI performance across diverse environments by establishing international cooperative bodies and synthetic data methodologies.

Projects are supported by