Automatic Correction of Internal Units in Generative Neural Networks

Ali Tousi*, Haedong Jeong*, Jiyeon Han, Hwanil Choi and Jaesik Choi**

KAIST, UNIST and INEEJI

* Both authors contributed equally to this research.

** Corresponding Author

Generative Adversarial Networks (GANs) have shown satisfactory performance in synthetic image generation by devising complex network structures and adversarial training schemes. Even though GANs are able to synthesize realistic images, a number of generated images contain defective visual patterns, known as artifacts. While most recent work tries to fix artifact generations by perturbing the latent code, few studies investigate the internal units of a generator to fix them. In this work, we devise a method that automatically identifies the internal units generating various types of artifact images. We further propose a sequential correction algorithm that adjusts the generation flow by modifying the detected artifact units, improving generation quality while preserving the original outline. Our method outperforms the baseline method in terms of FID score and shows satisfactory results in human evaluation.
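The abstract reports results in terms of FID. For reference, the standard Fréchet Inception Distance (the general definition, not something specific to this work) compares Gaussian fits to Inception features of real images (mean $\mu_r$, covariance $\Sigma_r$) and generated images ($\mu_g$, $\Sigma_g$); lower values mean the generated statistics are closer to the real ones:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```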

Contributions

- We compile a large dataset of curated flawed generations and provide a comprehensive analysis of artifact generations.

- We identify defective units in a generative model by measuring the intersection-over-union (IoU) between each unit’s activation map and pseudo artifact-region masks, which are obtained from a simple classifier trained on our dataset.

- We propose an artifact removal method that globally ablates defective units, which enhances the quality of artifact samples while preventing drastic changes to normal samples. We further improve the approach by sequentially ablating the defective units throughout subsequent layers.
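A minimal NumPy sketch of the IoU-based unit identification described in the second bullet, under stated assumptions: each unit's activation map is upsampled (nearest neighbour) to the mask resolution and binarised with a per-unit quantile threshold, in the style of GAN Dissection. The 0.99 default quantile and the helper names `upsample_nearest`, `unit_iou_scores` and `top_units` are hypothetical choices for illustration, not taken from the paper.

```python
import numpy as np


def upsample_nearest(act: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour upsample an (h, w) map to (size, size).

    Assumes `size` is an integer multiple of both h and w.
    """
    h, w = act.shape
    return np.repeat(np.repeat(act, size // h, axis=0), size // w, axis=1)


def unit_iou_scores(acts: np.ndarray, masks: np.ndarray, quantile: float = 0.99) -> np.ndarray:
    """IoU between each unit's binarised activation map and the pseudo artifact masks.

    acts:  (N, C, h, w) activations of one generator layer for N generated images.
    masks: (N, S, S) boolean pseudo artifact masks, e.g. thresholded Grad-CAM maps.
    quantile: hypothetical per-unit threshold level (assumption, GAN Dissection style).
    """
    n, c, _, _ = acts.shape
    size = masks.shape[-1]
    scores = np.zeros(c)
    for u in range(c):
        # One threshold per unit, taken over all images and spatial positions.
        thr = np.quantile(acts[:, u], quantile)
        inter = union = 0
        for i in range(n):
            unit_mask = upsample_nearest(acts[i, u], size) > thr
            inter += np.logical_and(unit_mask, masks[i]).sum()
            union += np.logical_or(unit_mask, masks[i]).sum()
        scores[u] = inter / union if union > 0 else 0.0
    return scores


def top_units(scores: np.ndarray, k: int) -> list:
    """Indices of the k highest-IoU units, i.e. the candidate defective units."""
    return np.argsort(scores)[::-1][:k].tolist()
```

Intersection and union are accumulated over all images before dividing, so a unit is scored on the whole dataset rather than by averaging per-image IoUs; both choices are plausible, and this one is simply an assumption of the sketch.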

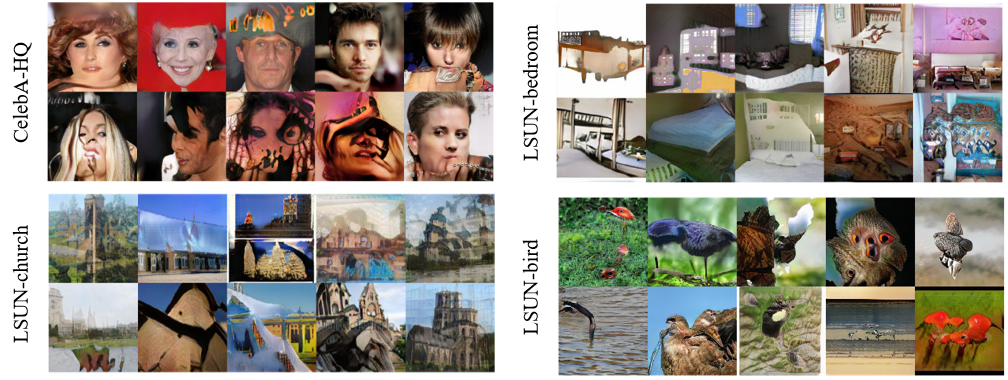

Artifacts in GANs

The term artifact has been used in previous work to describe synthesized images that have unnatural (or undesired) visual patterns. The figure below shows illustrative examples of artifacts in PGGAN trained on various datasets.

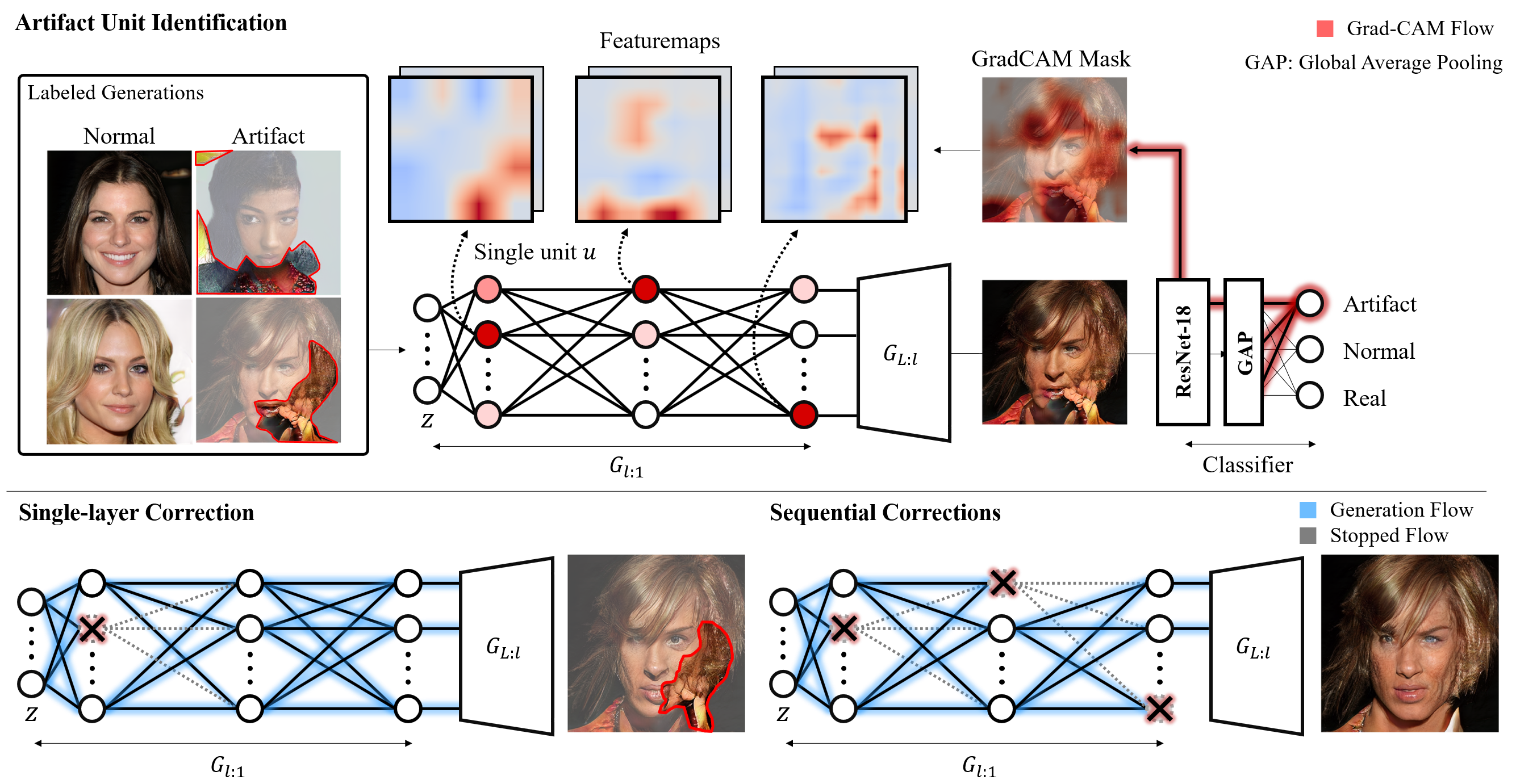

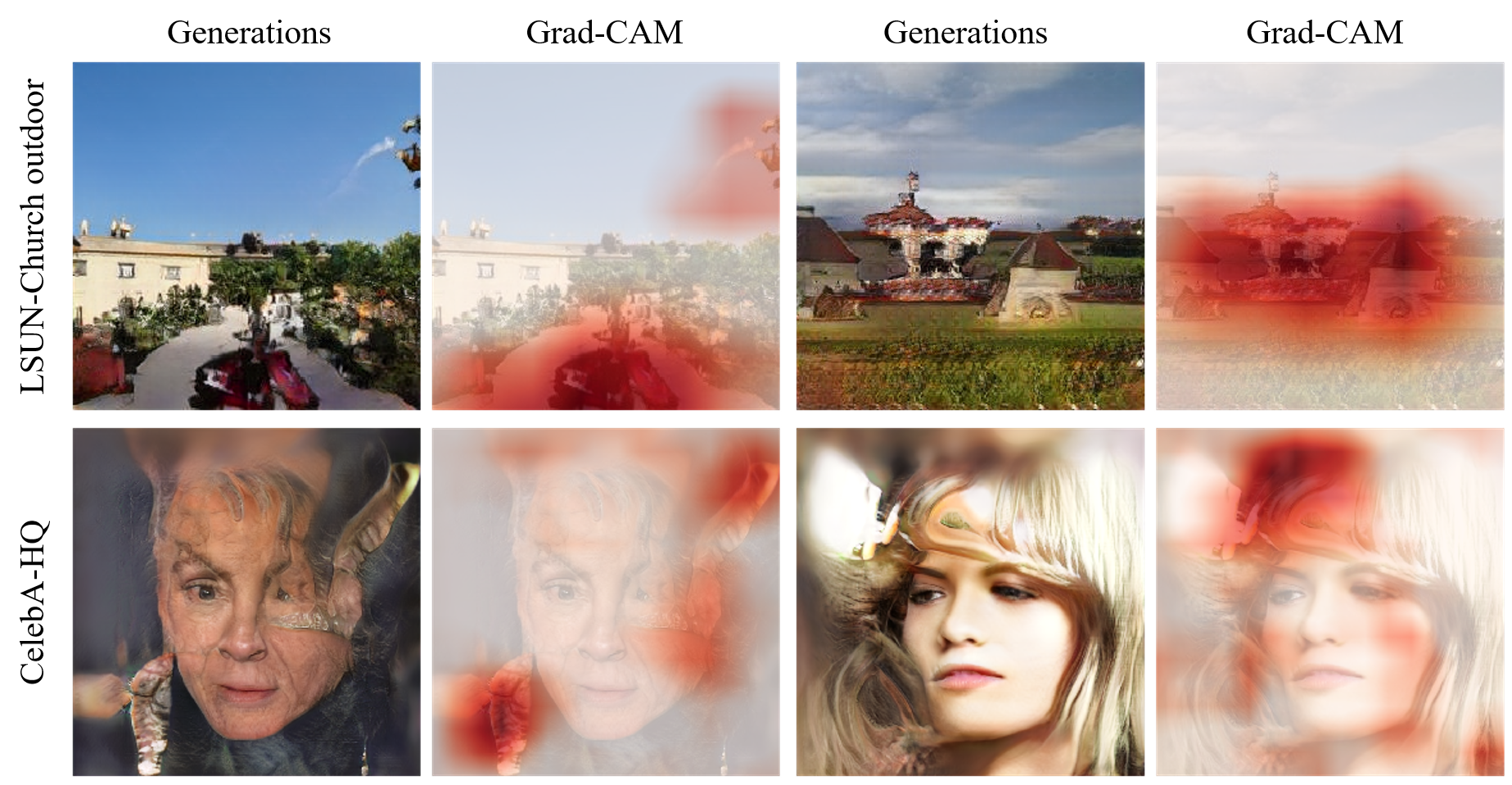

Classifier-Based Artifact Unit Identification

To identify the internal units that cause high-level semantic artifacts, we hand-label 2k generated images as normal or artifact generations. We then build a model that classifies samples into three categories: artifact, normal, and randomly selected real samples. We employ an image classifier (e.g., ResNet-18) as the feature extraction module and add one fully connected layer on top of it for classification. After training, we apply Grad-CAM to obtain a mask of the defective region in each generation.
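The Grad-CAM step can be sketched as follows, assuming the feature maps of the classifier's last convolutional block and the gradients of the artifact-class score with respect to them have already been obtained by backpropagation through the three-way classifier. The function name `grad_cam_mask` and the 0.5 threshold on the normalised map are hypothetical choices; the paper's exact binarisation rule is not given here.

```python
import numpy as np


def grad_cam_mask(feats: np.ndarray, grads: np.ndarray, out_size: int,
                  threshold: float = 0.5) -> np.ndarray:
    """Binary pseudo artifact mask from Grad-CAM evidence for one image.

    feats: (K, h, w) feature maps of the classifier's last convolutional block.
    grads: (K, h, w) gradients of the 'artifact' class score w.r.t. `feats`.
    threshold: hypothetical cut-off on the [0, 1]-normalised Grad-CAM map.
    """
    # Grad-CAM channel weights: gradients global-average-pooled over space.
    alpha = grads.mean(axis=(1, 2))
    # Weighted sum of feature maps, then ReLU to keep only positive evidence for the class.
    cam = np.maximum(np.tensordot(alpha, feats, axes=1), 0.0)
    # Nearest-neighbour upsampling to image size (out_size must be a multiple of h and w).
    h, w = cam.shape
    cam = np.repeat(np.repeat(cam, out_size // h, axis=0), out_size // w, axis=1)
    if cam.max() <= 0.0:
        return np.zeros(cam.shape, dtype=bool)  # no positive evidence anywhere
    # Normalise to [0, 1] and keep the strongly activated region as the mask.
    return (cam / cam.max()) >= threshold
```

In a real pipeline the inputs would come from an autograd framework (for example, a forward hook on the last convolutional block of a ResNet-18); passing them in as arrays keeps the sketch independent of any particular framework.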

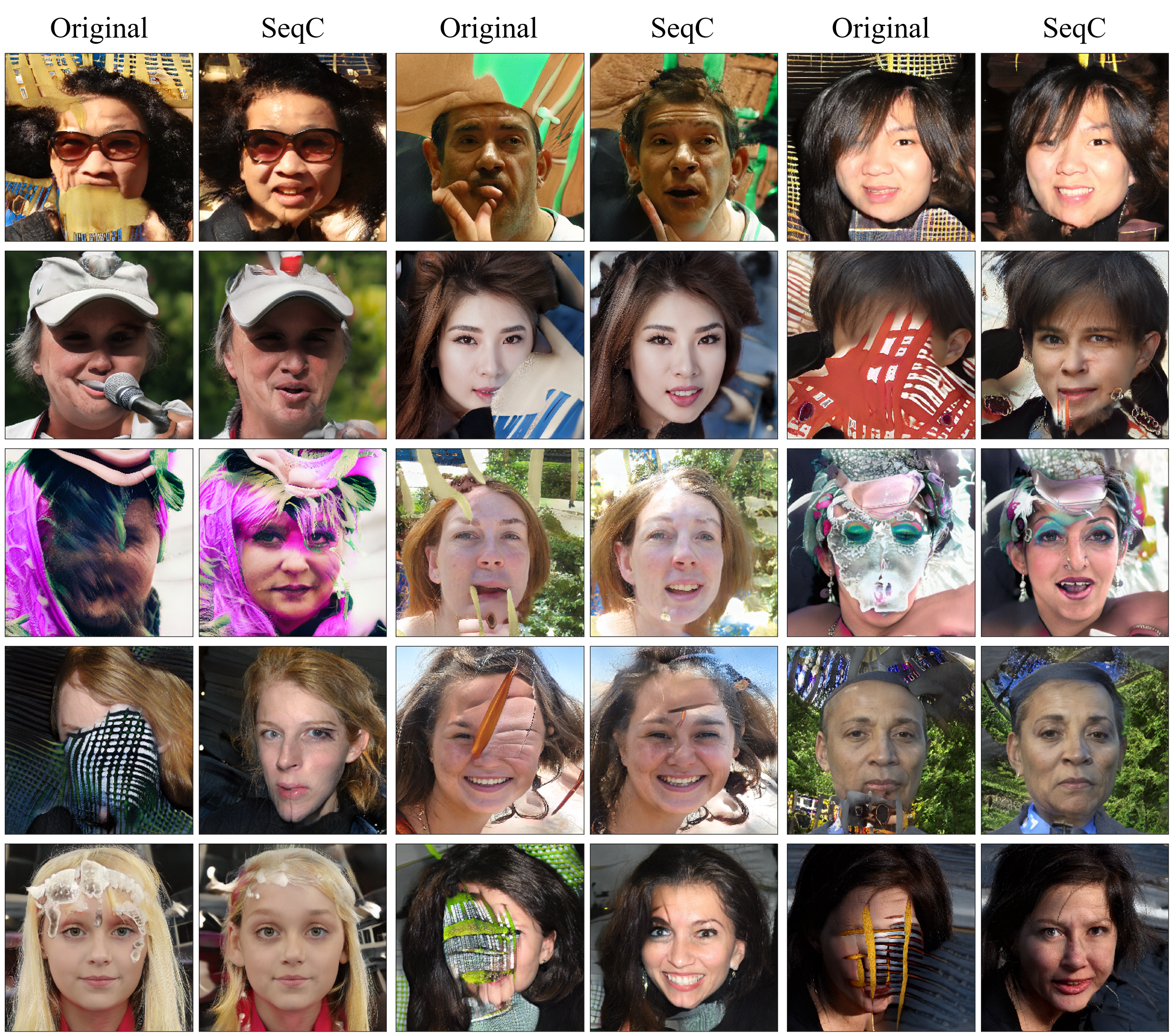

Sequential Correction

We propose sequential correction to improve generation quality by suppressing the side effects of single-layer ablation. In sequential correction, we adjust the activations of units in the subsequent layers. The figure below shows qualitative results for each correction method: FID denotes the existing (baseline) method, DS (Ours) denotes single-layer ablation with classifier-based unit detection, and SeqC (Ours) denotes ablation across subsequent layers with classifier-based unit detection.
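One way to read the difference between single-layer ablation (DS) and sequential correction (SeqC) is sketched below. This is an interpretation, not the authors' code: the generator is modelled as a list of layer functions, ablation sets a unit's feature map to zero, and `score_units` is a hypothetical stand-in for any per-unit defectiveness score (for instance, IoU with the pseudo artifact masks). In the sequential variant, each later layer's units are scored on activations that already reflect the ablations chosen for earlier layers.

```python
from typing import Callable, Dict, List, Optional, Sequence

import numpy as np

# A layer maps activations of shape (N, C_in, h, w) to (N, C_out, h', w').
Layer = Callable[[np.ndarray], np.ndarray]


def forward(layers: Sequence[Layer], z: np.ndarray,
            ablate: Dict[int, List[int]], stop: Optional[int] = None) -> np.ndarray:
    """Run the generator, zeroing the listed units after each layer.

    Returns the final output, or the activations of layer `stop` if given.
    """
    x = z
    for l, layer in enumerate(layers):
        x = layer(x)
        if ablate.get(l):
            x = x.copy()
            x[:, ablate[l]] = 0.0  # ablation: the unit's whole feature map is set to zero
        if stop == l:
            return x
    return x


def single_layer_plan(layers, z, score_units, layer_id: int, k: int) -> Dict[int, List[int]]:
    """DS-style: identify and ablate the top-k units of one layer only."""
    scores = score_units(forward(layers, z, {}, stop=layer_id))
    return {layer_id: np.argsort(scores)[::-1][:k].tolist()}


def sequential_correction(layers, z, score_units, layer_ids: Sequence[int],
                          k: int) -> Dict[int, List[int]]:
    """SeqC-style: visit layers in ascending order; each layer's units are scored on
    activations that already include the ablations chosen for earlier layers."""
    ablate: Dict[int, List[int]] = {}
    for l in sorted(layer_ids):
        scores = score_units(forward(layers, z, ablate, stop=l))
        ablate[l] = np.argsort(scores)[::-1][:k].tolist()
    return ablate
```

With a toy two-layer generator whose second layer mixes a unit from the first layer into one of its own channels, ablating that first-layer unit changes which second-layer unit scores highest; identifying units layer by layer on the already-corrected flow is what lets the sequential variant account for this, whereas a one-layer plan never sees it.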

Generalization

Although the proposed method is applied to a generator with a conventional structure, the same approach can be generalized, with minor modifications, to recent state-of-the-art generators such as StyleGAN2.

Citation

Ali Tousi*, Haedong Jeong*, Jiyeon Han, Hwanil Choi and Jaesik Choi, Automatic Correction of Internal Units in Generative Neural Networks, In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR’21).

(*Equal contribution)

Bibtex

@inproceedings{tousi2021automatic,
  title={Automatic Correction of Internal Units in Generative Neural Networks},
  author={Tousi, Ali and Jeong, Haedong and Han, Jiyeon and Choi, Hwanil and Choi, Jaesik},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={7932--7940},
  year={2021}
}

Acknowledgement

This work was supported by an Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2017-0-01779, XAI; No. 2019-0-00075, Artificial Intelligence Graduate School Program (KAIST)).